The social distancing, mask-wearing, and spike in screen time during COVID prompted fears of a social and emotional recession for children and adolescents, many of whom now say they missed out on the formative milestones that build social skills and emotional resilience.

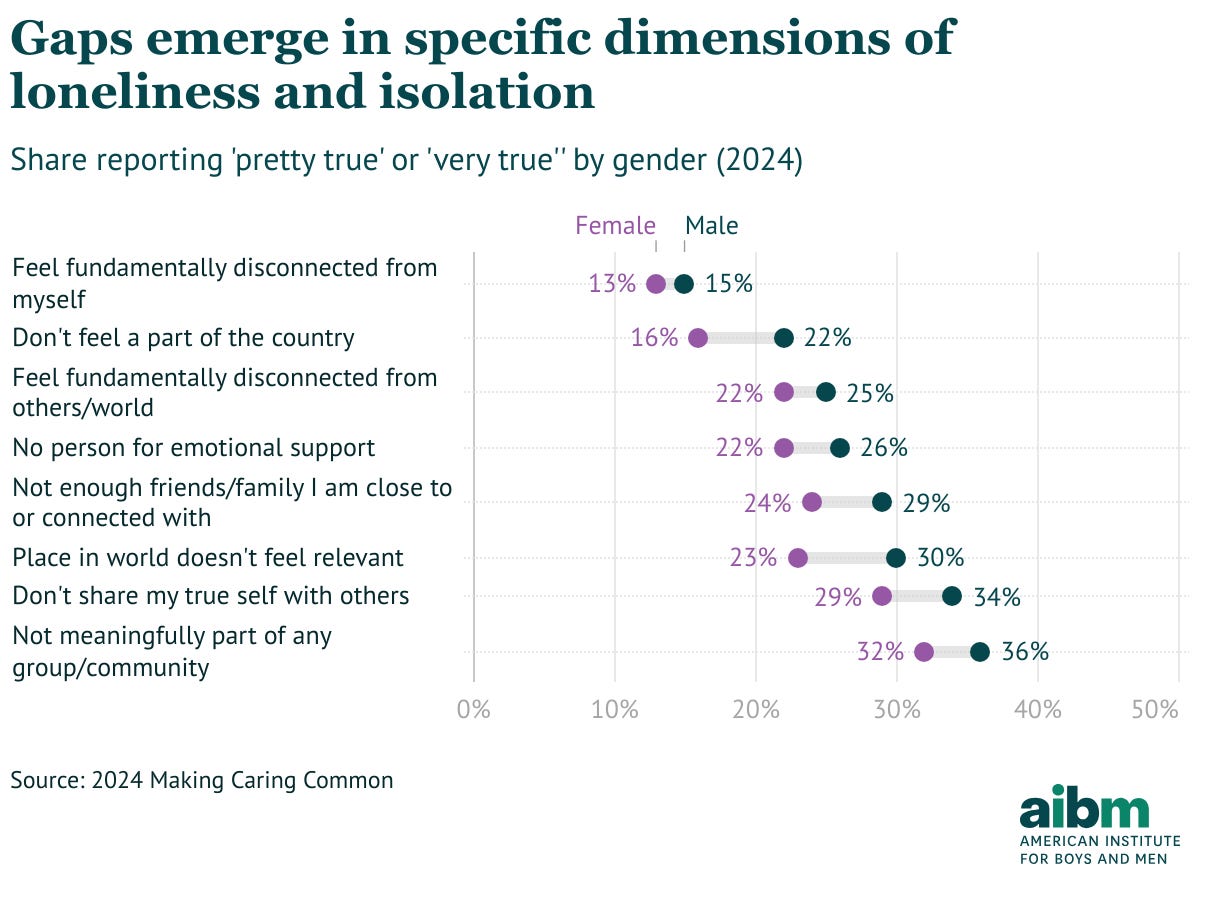

In the years since, Pew surveys have consistently found that nearly half of U.S. teens report being online “almost constantly.” Mental health diagnoses have outpaced growth in the number of service providers. Rates of loneliness and isolation have risen, especially among young people, and more than a third of men say they do not feel meaningfully part of any group or community. When asked what they think contributes to loneliness in America, respondents put technology at the top of the list.

Into that context comes a new kind of relationship: AI companions designed to provide emotionally tailored support and to simulate reciprocity. According to a new report we commissioned from behavioralist Dr Rupert Gill:

- Roughly three in four U.S. teens have used an AI companion
- About half are now regular users
- One in five say they spend as much time with AI companions as with human friends, or more
- Among top AI apps, a notable share are AI companions, not productivity apps

Our new report explains what this shift means for boys and young men in particular, at a moment when friendship networks are thinning, loneliness is widespread, and in-person emotional support is stretched. AI companions function less like digital assistants and more like digital painkillers: they can relieve loneliness, but they can also produce dependence and delay the development of coping skills.

I hope you enjoy this edited version of our Substack Live conversation, in which we discuss:

- The similarities between the emotional tactics of AI companions and those of romance scammers
- The potential of AI companions to build up our social skills, confidence, and self-awareness
- The risk of AI companions displacing our human relationships and financially exploiting our emotional vulnerability
- The current market incentives, and a possible regulatory framework that would instead reward designing AI companions for emotional wellbeing rather than dependency and displacement

I encourage you to read Rupert’s full commentary on the AIBM website, and the extended report for further analysis of the evidence gaps that remain to be filled.

Thanks to Jim Geschke, Hunter, Matthew Allaire, and many others for tuning in live. As a reminder, you can subscribe to our podcast feed on Spotify, Apple Podcasts, or wherever you get your podcasts.